Autofocus is an essential function for automated microscopes, where refocussing may be required as the field of view is changed during unsupervised imaging. Since multiwell plates are typically not flat, the refocussing required across a multiwell plate can exceed 100 μm. Autofocus is also important for any imaging modality that requires extended image data acquisition times, such as time-lapse microscopy or single molecule localisation microscopy. Any autofocus system comprises a method to determine the degree of defocus and an actuator to move either the sample or the objective lens to bring the microscope back into focus.

Autofocus approaches can be categorised as software-based (image-based) techniques, which calculate the defocus of the microscope from a metric computed on the normal microscope images, or hardware-based (optical) techniques, which utilise an additional optical system to determine the defocus, typically by measuring the distance to the microscope coverslip. Image-based autofocus techniques can interrupt the normal image acquisition of the microscope in order to determine and correct the defocus, and may increase the light dose to the sample if additional images are required to restore focus. They work best with thin samples that do not extend beyond the depth of focus. Optical autofocus systems can run in parallel with the normal microscope image acquisition and typically measure the distance to the coverslip using near-infrared radiation, which minimises phototoxicity. They are almost sample-agnostic and so work well with extended samples.
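For comparison with the optical approach, image-based autofocus is often implemented by acquiring a short z-stack and choosing the plane that maximises a sharpness metric. The sketch below uses the Brenner gradient as that metric. It is an illustrative assumption, not part of the optical modules described here, and `acquire` is a hypothetical callback that returns a 2D image (a list of rows) at a given z position.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]


def brenner_score(img: Image) -> float:
    """Brenner gradient: sum of squared differences between pixels two columns apart.

    Larger values indicate more high-frequency content, i.e. a sharper image.
    """
    score = 0.0
    for row in img:
        for x in range(len(row) - 2):
            d = row[x + 2] - row[x]
            score += d * d
    return score


def best_focus(z_positions: Sequence[float], acquire: Callable[[float], Image]) -> float:
    """Acquire one image per z position (hypothetical callback) and return the sharpest z."""
    scores = [brenner_score(acquire(z)) for z in z_positions]
    return z_positions[scores.index(max(scores))]
```

Each candidate plane costs one extra exposure, which is the source of the additional light dose and acquisition interruption noted above for image-based methods.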

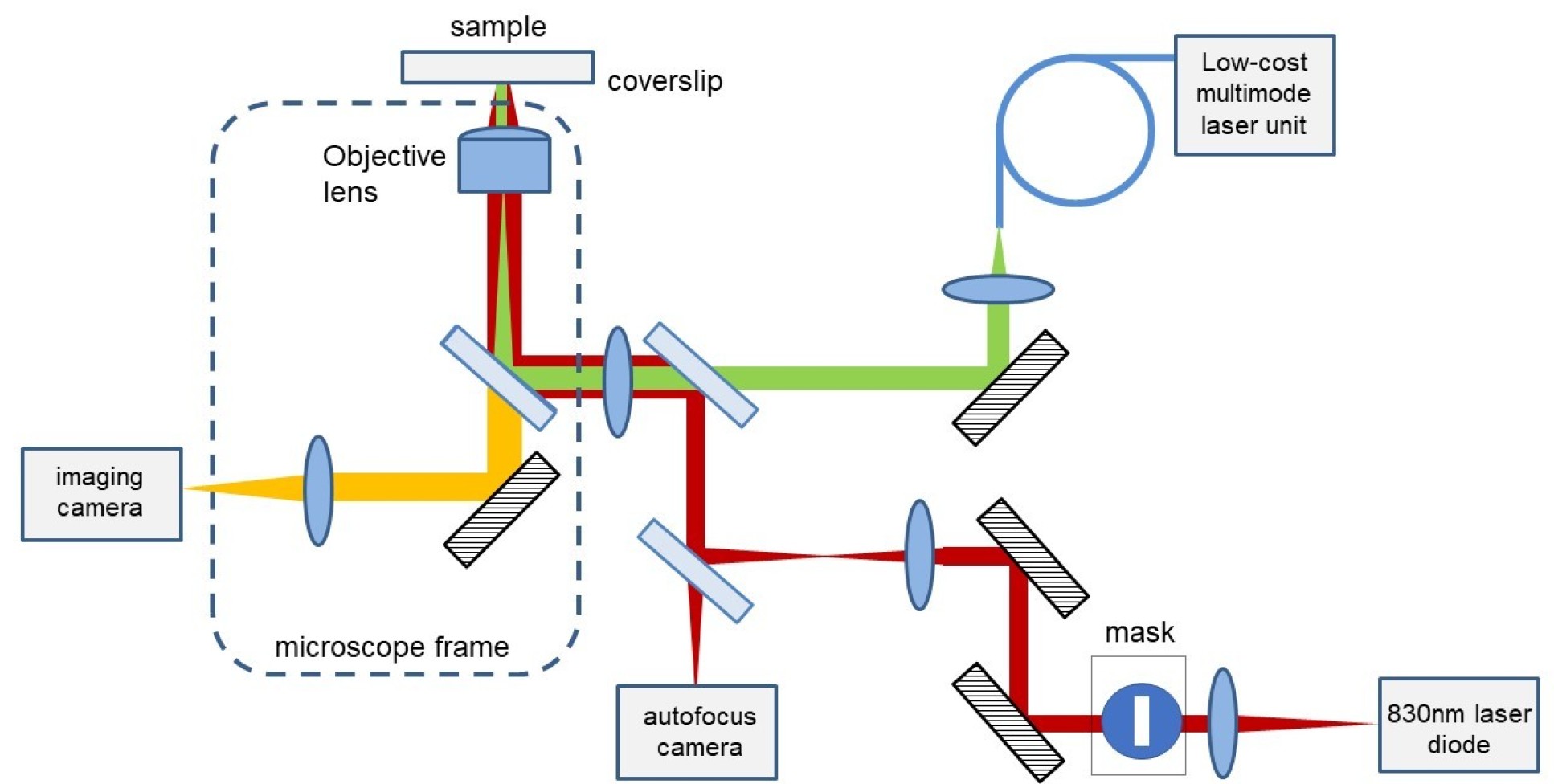

We have developed two optical autofocus modules for use with openFrame-based microscopes or other instruments. Our first implementation, which utilises machine learning to determine the amount of defocus, is illustrated in the figure below for automated multiwell plate imaging; it provides both long range (~±100 μm) and high precision (better than ~600 nm). It utilises an add-on module that focusses an NIR laser beam onto the microscope coverslip and calculates the degree of defocus from an image of the back-reflected beam recorded on an ancillary camera. The figure below depicts a configuration that is integrated with laser excitation for epifluorescence microscopy and easySTORM.

This approach uses a slit in the collimated autofocus laser beam so that the beam focussed onto the coverslip has different confocal parameters in the directions parallel and perpendicular to the slit. By analysing the image of the back-reflected beam resolved along these two directions, we can make high-precision defocus measurements within ~10 μm of the coverslip and lower-precision defocus measurements over ±100 μm from the coverslip.
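One simple way to reduce the back-reflected spot image to numbers that respond to defocus is to compute its intensity-weighted RMS width along each image axis. The sketch below assumes, hypothetically, that the slit is aligned with the camera axes and that a scalar background level is known. It only extracts the two widths; mapping them to a defocus value would still require a calibration against known stage positions, which is not shown here.

```python
import math
from typing import List, Tuple

Image = List[List[float]]


def rms_widths(img: Image, background: float = 0.0) -> Tuple[float, float]:
    """Return (width_x, width_y), the intensity-weighted RMS widths of a spot in pixels.

    Assumption (hypothetical): the slit is aligned with the camera x axis, so the
    two widths sample the directions parallel and perpendicular to the slit.
    """
    samples = []
    total = sum_x = sum_y = 0.0
    for y, row in enumerate(img):
        for x, value in enumerate(row):
            w = max(value - background, 0.0)  # clip negative values after background subtraction
            samples.append((x, y, w))
            total += w
            sum_x += w * x
            sum_y += w * y
    if total == 0.0:
        raise ValueError("no signal above background")
    cx, cy = sum_x / total, sum_y / total  # intensity-weighted centroid
    var_x = sum(w * (x - cx) ** 2 for x, _, w in samples) / total
    var_y = sum(w * (y - cy) ** 2 for _, y, w in samples) / total
    return math.sqrt(var_x), math.sqrt(var_y)
```

Second-moment widths are cheap to compute and use every pixel, but they are sensitive to background errors, so in practice a fitted profile or a learned model may be more robust.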

One aim of the autofocus system is to correct defocus arising from thermal drift or relaxation of optical components during long (e.g. multi-hour) HCA image data acquisitions. Unfortunately, the optical autofocus system itself can be subject to the same drift, and we developed an approach to correct for this by using a convolutional neural network (CNN) to calculate the defocus from the autofocus camera images. This autofocus operates in a two-step mode: it first applies the CNN trained on the long-range data to move close to focus, and then applies the CNN trained on the higher-precision short-range data. For more information about this autofocus system, please see our Journal of Microscopy paper, where it is applied to super-resolved HCA using easySTORM.
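The two-step logic can be sketched as a coarse-to-fine control loop. In this sketch, `coarse_model` and `fine_model` are hypothetical stand-ins for the CNNs trained on the long-range and short-range data, `capture` and `move_by` are hypothetical camera and stage callbacks, and the hand-over threshold of ~10 μm is taken from the short-range measurement range quoted above. The sign convention (a positive defocus is corrected by a negative move) is also an assumption.

```python
from typing import Callable, TypeVar

Image = TypeVar("Image")


def two_step_autofocus(
    capture: Callable[[], Image],
    move_by: Callable[[float], None],
    coarse_model: Callable[[Image], float],
    fine_model: Callable[[Image], float],
    fine_range_um: float = 10.0,
    max_coarse_steps: int = 3,
) -> float:
    """Coarse-to-fine focus correction; returns the total stage movement in micrometres.

    Both models are assumed to return the estimated defocus in micrometres.
    """
    total_move = 0.0
    # Step 1: long-range model brings the sample within the fine model's range.
    for _ in range(max_coarse_steps):
        defocus = coarse_model(capture())
        if abs(defocus) <= fine_range_um:
            break
        move_by(-defocus)
        total_move -= defocus
    # Step 2: short-range, higher-precision model makes the final correction.
    # (A real system would probably check the fine model's validity range first.)
    defocus = fine_model(capture())
    move_by(-defocus)
    total_move -= defocus
    return total_move
```

Splitting the task this way lets each model specialise: the coarse model only needs to be accurate enough to land within the fine model's capture range.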

More recently, we have developed a second implementation of an optical autofocus module, "openAF", which does not require machine learning and can operate in a single-shot, closed-loop mode.