How can robots understand the actions of other agents (humans and other robots), infer their internal states and intentions, and build models of their behaviour to facilitate better interaction and collaboration? Our laboratory’s interdisciplinary research spans several areas, including human-centred computer vision, machine learning, multiscale user modelling, cognitive architectures, and shared control. We aim to advance fundamental theoretical concepts in these fields without ignoring the engineering challenges of the real world, so our experiments involve real robots, humans, and tasks.

Feel free to contact us if you have any queries, are interested in joining us as a student or a researcher, or have a great idea for scientific collaboration.

Research Themes

- Multimodal Perception of Human Actions and Inference of Internal Human States

- Multiscale Human Modelling & Human-in-the-Loop Digital Twins

- Skill Representation and Learning in Humans and Robots

- Motion Planning in Individual and Collaborative Tasks

- Mixed Reality for Human-Robot Interaction

- Explainability, Trust and Privacy in Human-Robot Interaction

- Robotic Caregivers and Physical Interaction

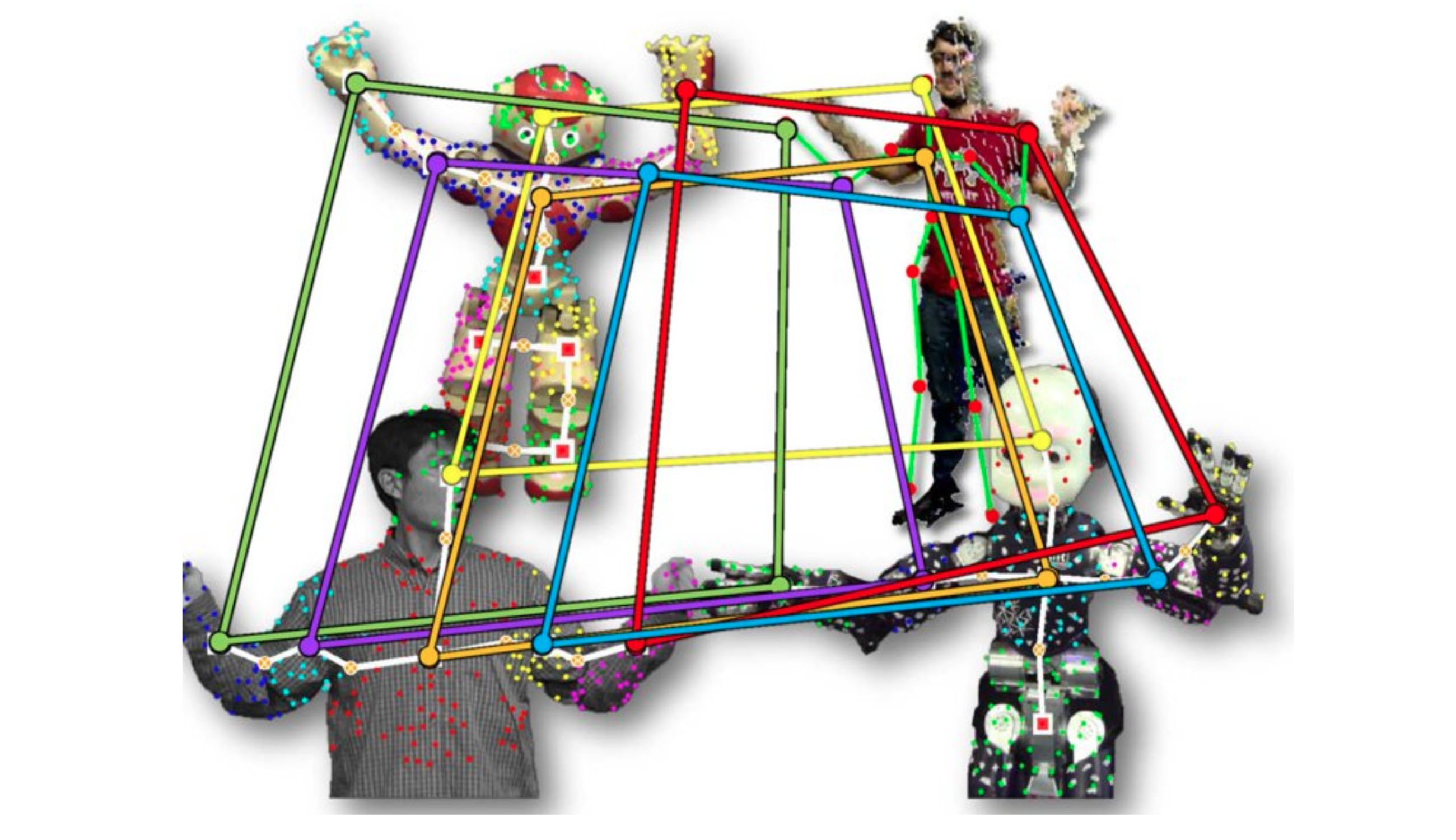

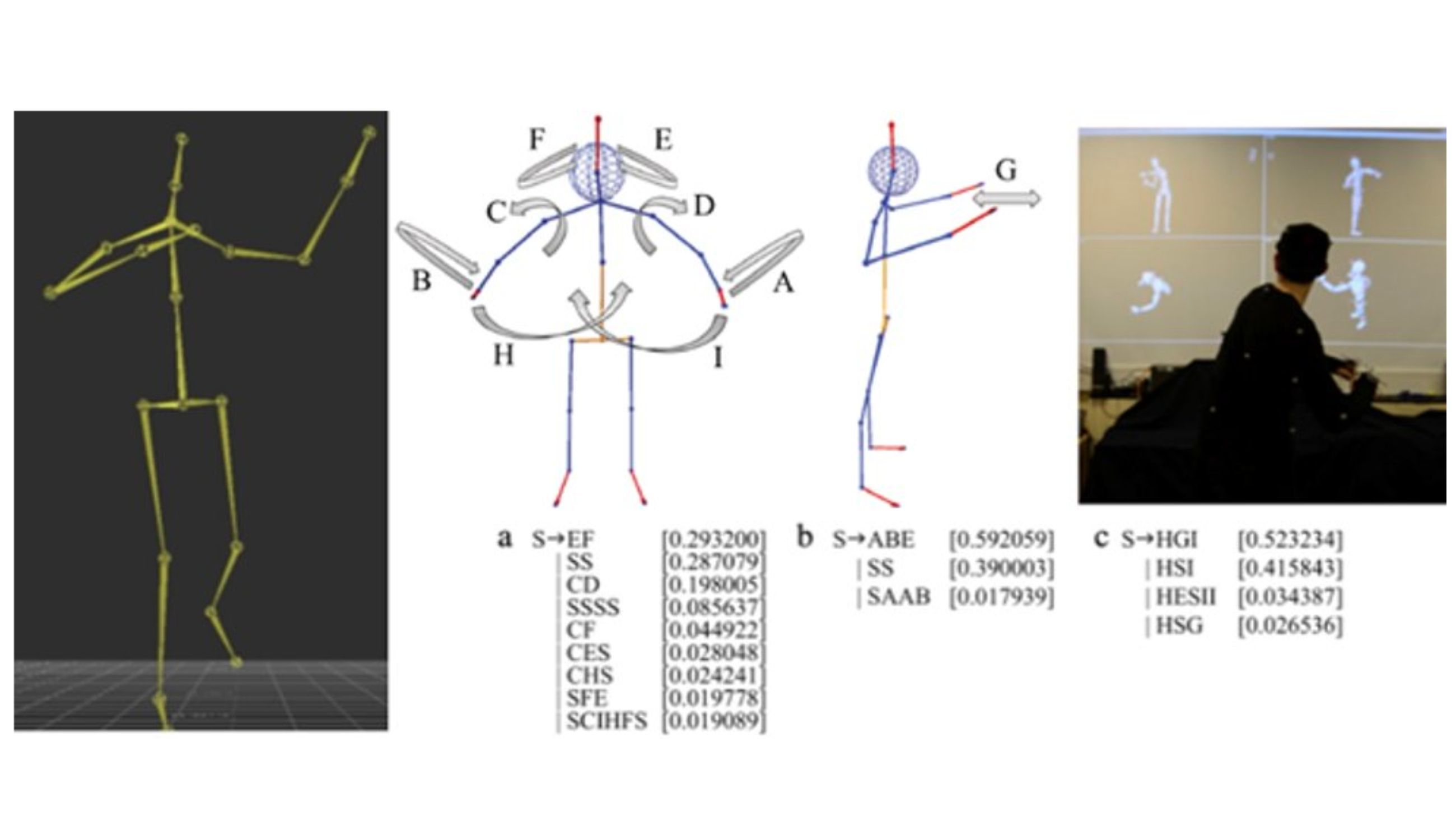

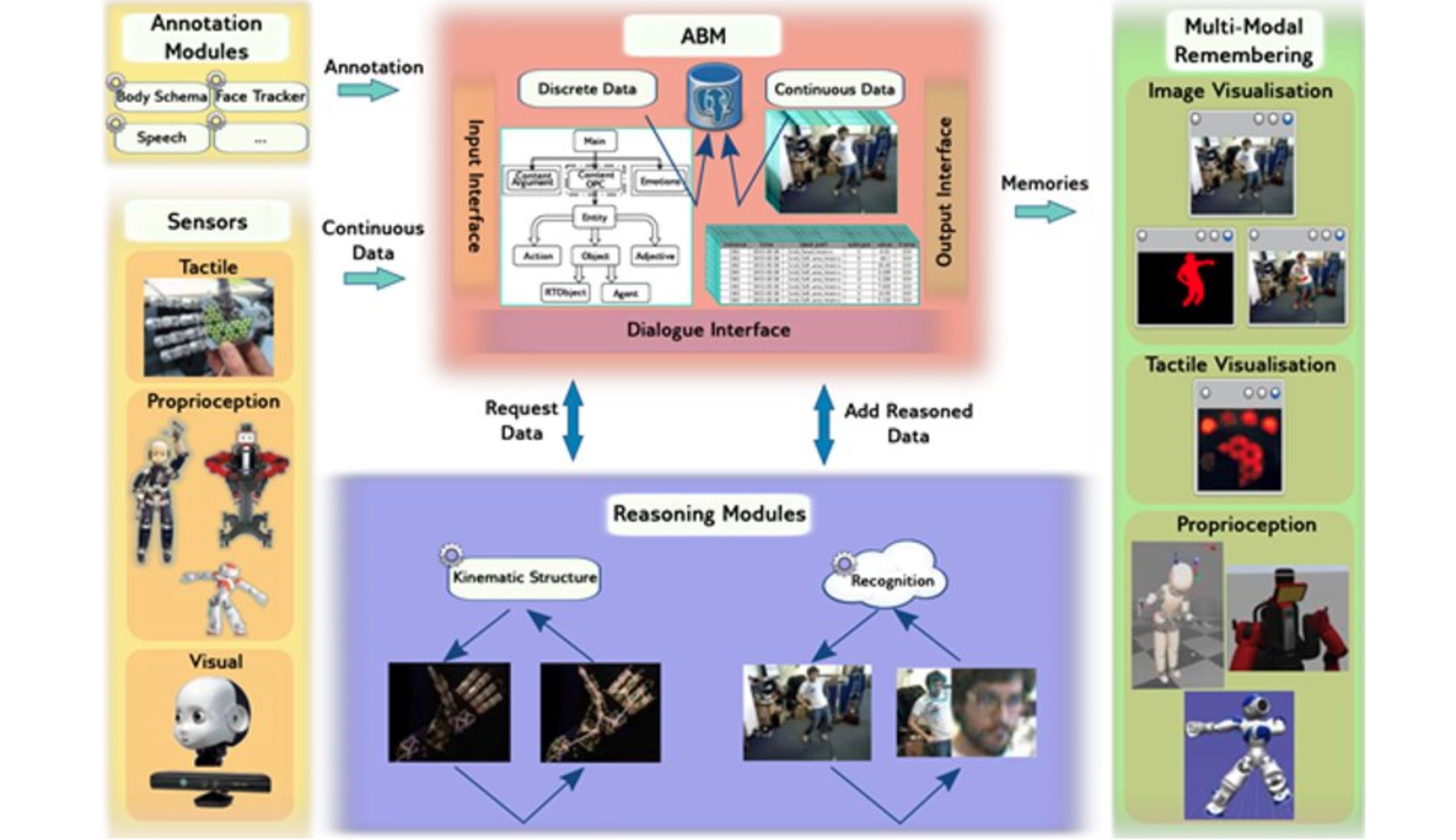

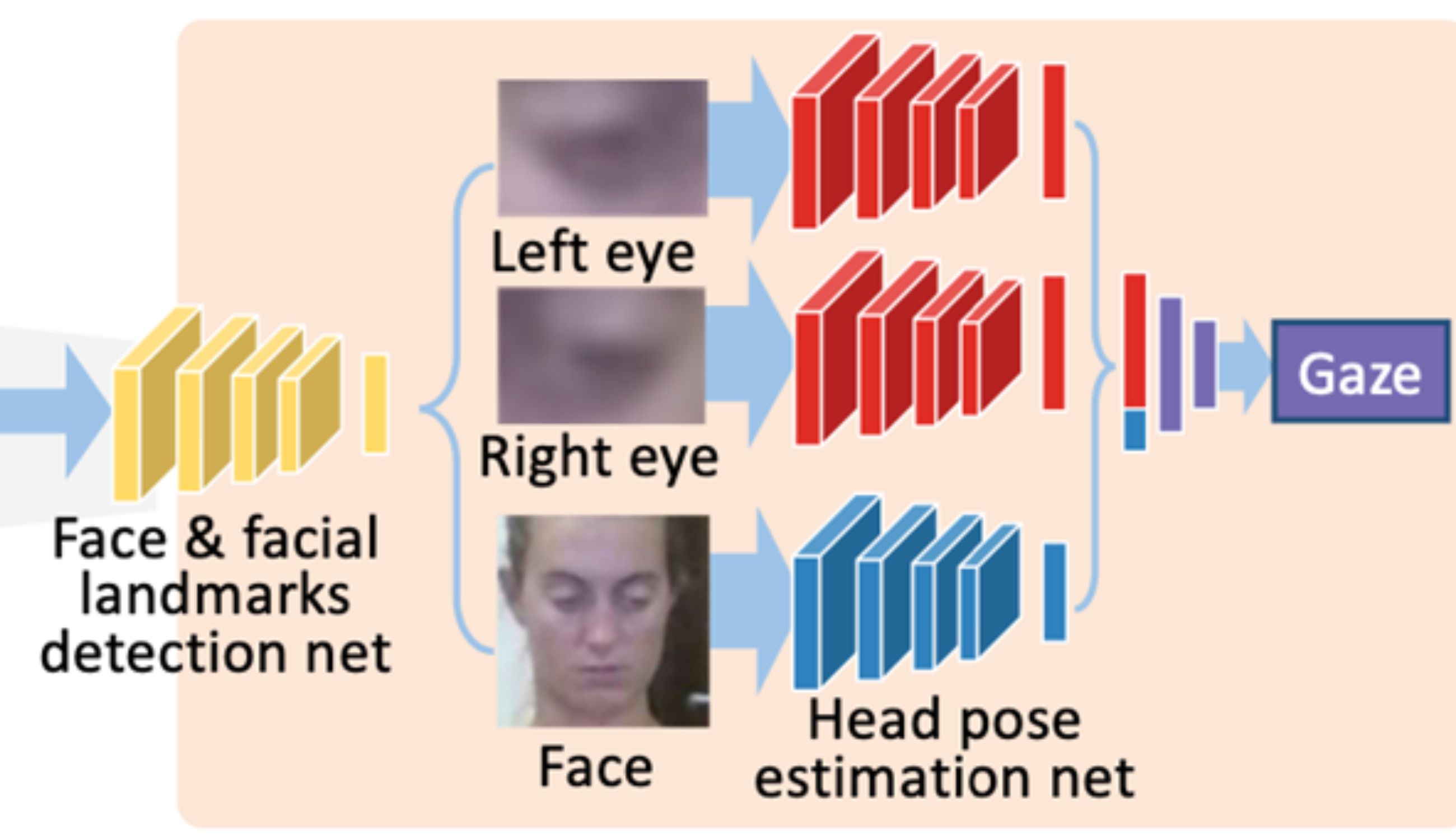

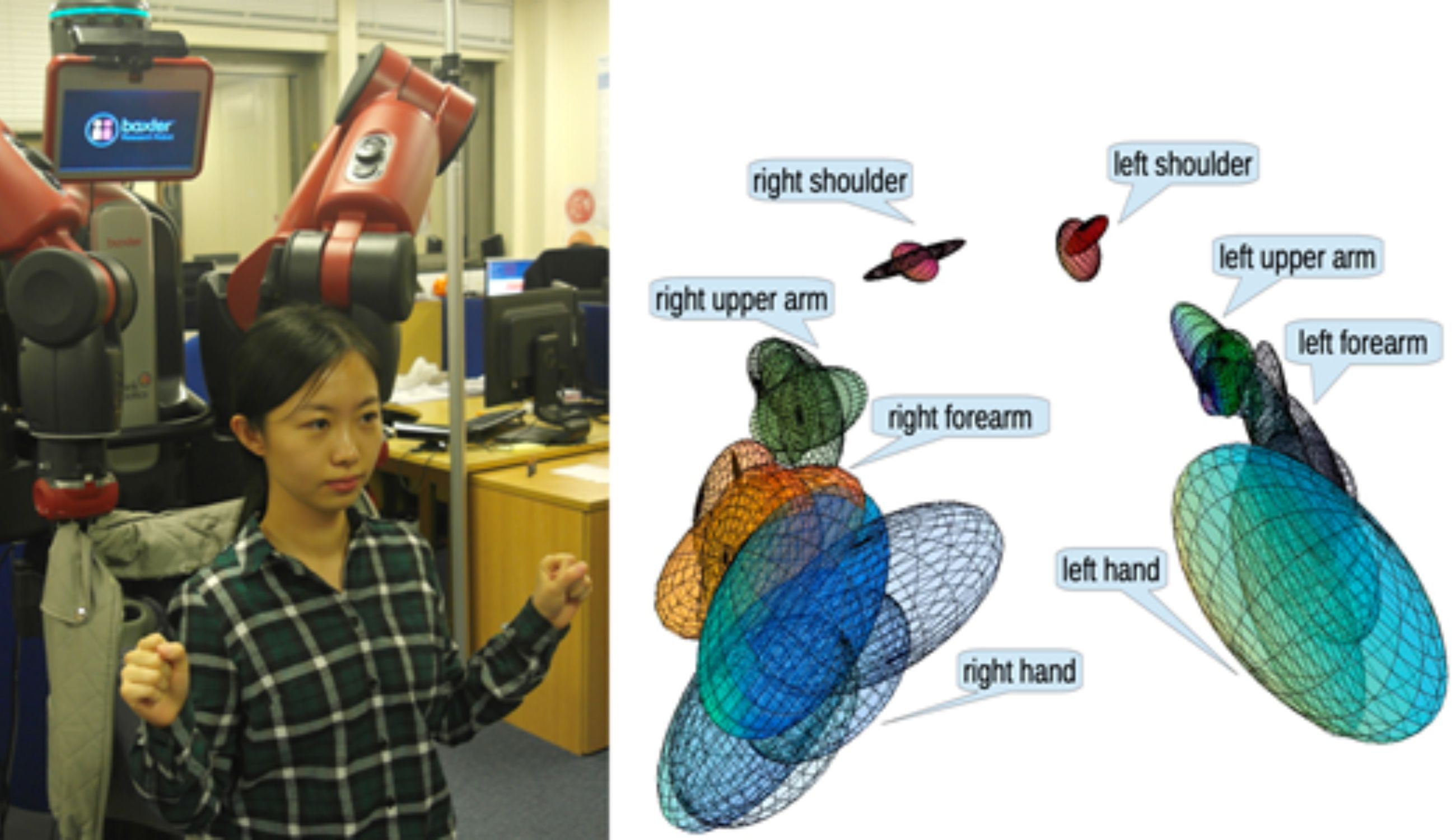

At the core of our research lies the ability of the robot system to perceive what humans are doing and infer their internal cognitive states (including beliefs and intentions). We use multimodal signals (RGB-D, event-based (DVS), thermal, haptic and audio) to perform this inference. We research most steps of the human action perception pipeline (eye tracking, pose estimation and tracking, human motion analysis, and action segmentation). We collect and publish our datasets for the community’s benefit (see the Software section of this website).

Key Publications:

- X. Zhang, P. Angeloudis and Y. Demiris, 2022, ST CrossingPose: A Spatial-Temporal Graph Convolutional Network for Skeleton-Based Pedestrian Crossing Intention Prediction, in IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 11, pp. 20773-20782.

- H. Razali and Y. Demiris, 2022, Using Eye Gaze to Forecast Human Pose in Everyday Pick and Place Actions, 2022 International Conference on Robotics and Automation (ICRA), pp. 8497-8503.

- Y. Jang and Y. Demiris, 2022, Message Passing Framework for Vision Prediction Stability in Human Robot Interaction, 2022 International Conference on Robotics and Automation (ICRA), pp. 8726-8733.

- U. M. Nunes and Y. Demiris, 2022, Kinematic Structure Estimation of Arbitrary Articulated Rigid Objects for Event Cameras, 2022 International Conference on Robotics and Automation (ICRA), pp. 508-514.

- U. M. Nunes and Y. Demiris, 2021, Robust Event-Based Vision Model Estimation by Dispersion Minimisation, in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 12, pp. 9561-9573.

- Nunes, U. M., & Demiris, Y., 2020, Entropy Minimisation Framework for Event-Based Vision Model Estimation, in European Conference on Computer Vision, pp. 161-176.

- V. Schettino and Y. Demiris, 2019, Inference of user-intention in remote robot wheelchair assistance using multimodal interfaces, 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 4600-4606.

- Buizza C, Fischer T, Demiris Y, 2019, Real-Time Multi-Person Pose Tracking using Data Assimilation, IEEE Winter Conference on Applications of Computer Vision.

- Fischer T, Chang HJ, Demiris Y, 2018, RT-GENE: Real-Time Eye Gaze Estimation in Natural Environments, Proceedings of the European Conference on Computer Vision, pp:339-357

- Chang HJ, Fischer T, Petit M, Zambelli M, Demiris Y, 2018, Learning Kinematic Structure Correspondences Using Multi-Order Similarities, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 40, no. 12, pp:2920-2934.

- Choi, J., Chang, H. J., Fischer, T., Yun, S., Lee, K., Jeong, J., ... & Choi, J. Y., 2018, Context-Aware Deep Feature Compression for High-Speed Visual Tracking, in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 479-488.

- Nunes, U. M., & Demiris, Y., 2018, 3D motion segmentation of articulated rigid bodies based on RGB-D data, in BMVC, Vol. 6, No. 7, p. 19.

- Choi, J., Jin Chang, H., Yun, S., Fischer, T., Demiris, Y., & Young Choi, J, 2017, Attentional Correlation Filter Network for Adaptive Visual Tracking, in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 4807-4816.

- Chang HJ, Fischer T, Petit M, Zambelli M, Demiris Y, 2016, Kinematic Structure Correspondences via Hypergraph Matching, IEEE Conference on Computer Vision and Pattern Recognition, pp:4216-4425.

- Choi, J., Chang, H. J., Jeong, J., Demiris, Y., & Choi, J. Y., 2016, Visual Tracking Using Attention-Modulated Disintegration and Integration, in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 4321-4330.

- Chang HJ, Demiris Y, 2015, Unsupervised Learning of Complex Articulated Kinematic Structures combining Motion and Skeleton Information, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp:3138-3146

We are interested in learning and maintaining multiscale human models to personalise the contextual and temporal appropriateness of the assistance our robots provide – “how should we help this person, and when”. We typically represent humans at multiple levels of abstraction: from how they move, i.e., their spatiotemporal trajectory representations using statistical and neural network representations, to how they solve tasks, i.e., their action sequences using context-free grammatical representations, and how they use assistive equipment. Thus, we term our models “multiscale” models.

Key Publications:

- Y. Zhong and Y. Demiris, 2024, DanceMVP: Self-Supervised Learning for Multi-Task Primitive-Based Dance Performance Assessment via Transformer Text Prompting, in Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 38, No. 9, pp. 10270-10278.

- Y. Zhong, F. Zhang and Y. Demiris, 2023, Contrastive Self-Supervised Learning for Automated Multi-Modal Dance Performance Assessment, in ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1-5.

- Y. Gao, H. J. Chang and Y. Demiris, 2020, User Modelling Using Multimodal Information for Personalised Dressing Assistance, in IEEE Access, vol. 8, pp. 45700-45714.

- F. Zhang, A. Cully and Y. Demiris, 2019, Probabilistic Real-Time User Posture Tracking for Personalized Robot-Assisted Dressing, in IEEE Transactions on Robotics, vol. 35, no. 4, pp. 873-888.

- A. Cully and Y. Demiris, 2019, Online Knowledge Level Tracking with Data-Driven Student Models and Collaborative Filtering, in IEEE Transactions on Knowledge and Data Engineering, vol. 32, no. 10, pp. 2000-2013.

- F. Zhang, A. Cully and Y. Demiris, 2017, Personalized robot-assisted dressing using user modeling in latent spaces, 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 3603-3610.

- Georgiou, T., & Demiris, Y., 2017, Adaptive user modelling in car racing games using behavioural and physiological data, User Modeling and User-Adapted Interaction, 27(2), 267-311.

- Yixing Gao, Hyung Jin Chang and Y. Demiris, 2015, User modelling for personalised dressing assistance by humanoid robots, 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 1840-1845.

- Lee, K., Su, Y., Kim, T. K., & Demiris, Y., 2013, A syntactic approach to robot imitation learning using probabilistic activity grammars, Robotics and Autonomous Systems, 61(12), 1323-1334.

We are researching the learning processes that allow humans and robots to acquire and represent sensorimotor skills. Our research spans several representational paradigms: from embodiment-oriented statistical and neural representations, to more cognition-oriented ontological and knowledge-graph-based representations. We use active learning (motor and goal babbling and exploration) and social learning (e.g. human observation and imitation) to expand the range of skills our robots have.

Key Publications:

- Zhang, F., & Demiris, Y, 2022, Learning garment manipulation policies toward robot-assisted dressing, Science robotics, 7(65), eabm6010.

- H. Razali and Y. Demiris, 2021, Multitask Variational Autoencoding of Human-to-Human Object Handover, 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 7315-7320.

- Zambelli, M., Cully, A., & Demiris, Y, 2020, Multimodal representation models for prediction and control from partial information, Robotics and Autonomous Systems, 123, 103312.

- Korkinof, D., & Demiris, Y, 2017, Multi-task and multi-kernel Gaussian process dynamical systems, Pattern Recognition, 66, 190-201.

- M. Petit, T. Fischer and Y. Demiris, 2016, Lifelong Augmentation of Multimodal Streaming Autobiographical Memories, in IEEE Transactions on Cognitive and Developmental Systems, vol. 8, no. 3, pp. 201-213.

- A. Ribes, J. Cerquides, Y. Demiris and R. Lopez de Mantaras, 2016, Active Learning of Object and Body Models with Time Constraints on a Humanoid Robot, in IEEE Transactions on Cognitive and Developmental Systems, vol. 8, no. 1, pp. 26-41.

- H. Soh and Y. Demiris, 2015, Spatio-Temporal Learning With the Online Finite and Infinite Echo-State Gaussian Processes, in IEEE Transactions on Neural Networks and Learning Systems, vol. 26, no. 3, pp. 522-536.

- H. Soh and Y. Demiris, 2014, Incrementally Learning Objects by Touch: Online Discriminative and Generative Models for Tactile-Based Recognition, in IEEE Transactions on Haptics, vol. 7, no. 4, pp. 512-525.

- Wu, Y., Su, Y., & Demiris, Y., 2014, A morphable template framework for robot learning by demonstration: Integrating one-shot and incremental learning approaches, Robotics and Autonomous Systems, 62(10), 1517-1530.

How can robots plan and execute actions in an optimal, safe and trustworthy manner? We research robot motion generation algorithms in individual and collaborative tasks, paying particular attention to how control can be shared between human and robot collaborators. Apart from fundamental issues in human-robot shared/collaborative control, we are also interested in the interaction between multiple humans and robots, such as triadic interactions (for example, an assistive robot, an assisted person and their carer).

Key Publications:

- F. Zhang and Y. Demiris, 2022, Learning garment manipulation policies toward robot-assisted dressing, in Sci. Robot. 7, eabm6010

- V. Girbés-Juan, V. Schettino, L. Gracia et al., 2022, Combining haptics and inertial motion capture to enhance remote control of a dual-arm robot, in J Multimodal User Interfaces 16, 219–238

- V. Girbés-Juan, V. Schettino, Y. Demiris and J. Tornero, 2021, Haptic and Visual Feedback Assistance for Dual-Arm Robot Teleoperation in Surface Conditioning Tasks, in IEEE Transactions on Haptics, vol. 14, no. 1, pp. 44-56, 1 Jan.-March 2021

- T. Fischer, J. Y. Puigbò, D. Camilleri, P. D. H. Nguyen, C. Moulin-Frier, S. Lallée, G. Metta, T. J. Prescott, Y. Demiris and P. F. M. J. Verschure, 2018, iCub-HRI: A Software Framework for Complex Human–Robot Interaction Scenarios on the iCub Humanoid Robot, in Front. Robot. AI 5:22

- V. Schettino and Y. Demiris, 2020, Improving Generalisation in Learning Assistance by Demonstration for Smart Wheelchairs, in IEEE International Conference on Robotics and Automation (ICRA), pp. 5474-5480

- A. Kucukyilmaz and Y. Demiris, 2018, Learning Shared Control by Demonstration for Personalized Wheelchair Assistance, in IEEE Transactions on Haptics, vol. 11, no. 3, pp. 431-442

- H. Soh and Y. Demiris, Learning assistance by demonstration: smart mobility with shared control and paired haptic controllers, in J. Hum.-Robot Interact. 4, 3 (December 2015), 76–100.

- Y. Su, Y. Wu, H. Soh, Z. Du and Y. Demiris, 2013, Enhanced kinematic model for dexterous manipulation with an underactuated hand, in IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 2493-2499

Virtual and Augmented Reality interfaces have great potential for enhancing the interaction between humans and complex robot systems. Our research investigates how visualising and interacting with mixed reality information (for example dynamic signifiers, or dynamically determined affordances) can facilitate human collaboration through enhanced explainability and more fluid, efficient control.

Key Publications:

- R. Chacón-Quesada and Y. Demiris, 2022, Proactive Robot Assistance: Affordance-Aware Augmented Reality User Interfaces, in IEEE Robotics & Automation Magazine, vol. 29, no. 1, pp. 22-34

- R. Chacón-Quesada and Y. Demiris, 2020, Augmented Reality User Interfaces for Heterogeneous Multirobot Control, in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 11439-11444

- M. Zolotas and Y. Demiris, 2019, Towards Explainable Shared Control using Augmented Reality, in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 3020-3026

- R. Chacón-Quesada and Y. Demiris, 2019, Augmented Reality Controlled Smart Wheelchair Using Dynamic Signifiers for Affordance Representation, in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 4812-4818

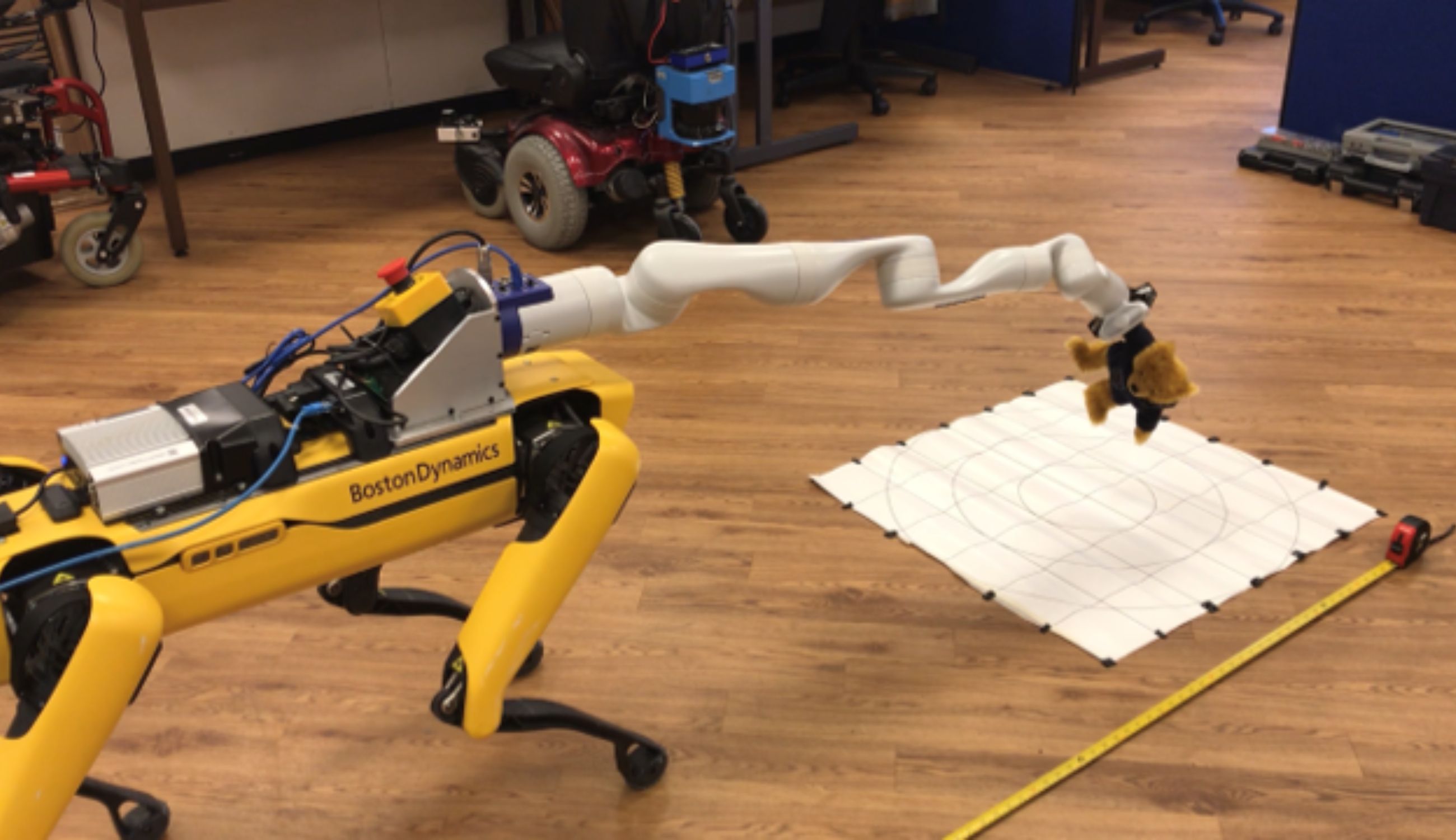

- J. Elsdon and Y. Demiris, 2018, Augmented Reality for Feedback in a Shared Control Spraying Task, in IEEE International Conference on Robotics and Automation (ICRA), pp. 1939-1946

- J. Elsdon and Y. Demiris, 2017, Assisted painting of 3D structures using shared control with a hand-held robot, in IEEE International Conference on Robotics and Automation (ICRA), pp. 4891-4897

As robots become more integrated into our lives, it's crucial they understand and respect privacy and trust. We focus on three key areas: 1) enabling robots to gauge human trust and adapt their behaviour accordingly, 2) designing robots with clear and understandable decision-making processes, and 3) ensuring they learn personalised behaviours without compromising user privacy.

Key Publications:

- C. Goubard and Y. Demiris, 2024, Learning Self-Confidence from Semantic Action Embeddings for Improved Trust in Human-Robot Interaction, in 2024 IEEE International Conference on Robotics and Automation (ICRA).

- F. Estevez Casado and Y. Demiris, 2022, Federated learning from demonstration for active assistance to smart wheelchair user, in 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 9326-9331.

Caregiving robots, a subset of assistive robots, are developed to support care-related tasks for elderly individuals and people with mobility impairments, aiming to improve their quality of life and independence while also reducing the workload on human caregivers.

Key Publications:

- Y. Gu and Y. Demiris, 2024, VTTB: A Visuo-Tactile Learning Approach for Robot-Assisted Bed Bathing, in IEEE Robotics and Automation Letters, vol. 9, no. 6, pp. 5751-5758

- S. Kotsovolis and Y. Demiris, 2024, Model Predictive Control with Graph Dynamics for Garment Opening Insertion during Robot-Assisted Dressing, in 2024 IEEE International Conference on Robotics and Automation (ICRA).

- S. Kotsovolis and Y. Demiris, 2023, Bi-Manual Manipulation of Multi-Component Garments towards Robot-Assisted Dressing, in 2023 IEEE International Conference on Robotics and Automation (ICRA), pp. 9865-9871.

- Zhang, F., & Demiris, Y, 2022, Learning garment manipulation policies toward robot-assisted dressing, Science robotics, 7(65), eabm6010.

- Y. Gao, H. J. Chang and Y. Demiris, 2020, User Modelling Using Multimodal Information for Personalised Dressing Assistance, in IEEE Access, vol. 8, pp. 45700-45714.

- F. Zhang, A. Cully and Y. Demiris, 2019, Probabilistic Real-Time User Posture Tracking for Personalized Robot-Assisted Dressing, in IEEE Transactions on Robotics, vol. 35, no. 4, pp. 873-888.

- F. Zhang, A. Cully and Y. Demiris, 2017, Personalized robot-assisted dressing using user modeling in latent spaces, 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 3603-3610.