
About us

The MIM Lab develops robotic and mechatronic surgical systems for a variety of procedures.

- Twitter/X: @MIMLab_Robotics
- YouTube: Mechatronics in Medicine Lab

Research lab info

What we do

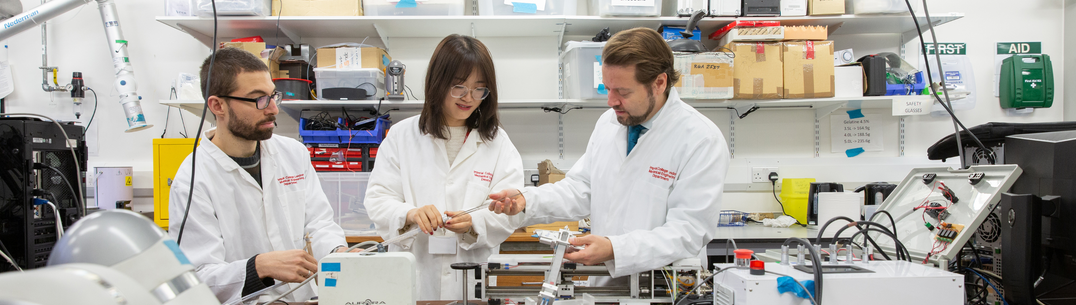

The Mechatronics in Medicine Laboratory develops robotic and mechatronic surgical systems for a variety of procedures, including neurosurgery, cardiovascular and orthopaedic surgery, and colonoscopy. Examples include bio-inspired catheters that can navigate along complex paths within the brain (as in the EDEN2020 project), soft robots that explore endoluminal anatomies such as the colon, and virtual reality solutions that support surgeons during knee replacement surgery.

Why is it important?

...

How can it benefit patients?

......

Meet the team

Results


- Conference paper: Koenig A, Rodriguez y Baena F, Secoli R, 2021, Gesture-Based Teleoperated Grasping for Educational Robotics, 30th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Publisher: IEEE, Pages: 222-228, ISSN: 1944-9445. Citations: 6.

- Journal article: Hu X, Rodriguez y Baena F, Cutolo F, 2020, Alignment-free offline calibration of commercial optical see-through head-mounted displays with simplified procedures, IEEE Access, Vol: 8, Pages: 223661-223674, ISSN: 2169-3536.

  Despite the growing availability of self-contained augmented reality head-mounted displays (AR HMDs) based on optical see-through (OST) technology, their potential applications across highly challenging medical and industrial settings are still hampered by the complexity of the display calibration required to ensure locational coherence between the real and virtual elements. The calibration of commercial OST displays remains an open challenge due to the inaccessibility of the user’s perspective and the limited hardware information available to the end-user. State-of-the-art calibrations usually comprise both offline and online stages. The offline calibration at a generic viewpoint provides a starting point for the subsequent refinements and is crucial. Current offline calibration methods either rely heavily on user alignment or require complicated hardware calibrations, making the overall procedure subjective and/or tedious. To address this problem, in this work we propose two fully alignment-free calibration methods with less complicated hardware calibration procedures compared with state-of-the-art solutions. The first method employs an eye-replacement camera to compute the rendering camera’s projection matrix based on photogrammetry techniques. The second method controls the rendered object position in a tracked 3D space to compensate for the parallax-related misalignment for a generic viewpoint. Both methods have been tested on Microsoft HoloLens 1. Quantitative results show that the average overlay misalignment is less than 4 pixels (around 1.5 mm or 9 arcmin) when the target stays within arm’s reach. The achieved misalignment is much lower than that of the HoloLens default interpupillary distance (IPD)-based correction, and equivalent to, but with lower variance than, the Single Point Active Alignment Method (SPAAM)-based calibration. The two proposed methods offer strengths in complementary aspects and can be chosen according to the user’s …

- Journal article: Matheson E, Rodriguez y Baena F, 2020, Biologically Inspired Surgical Needle Steering: Technology and Application of the Programmable Bevel-Tip Needle, Biomimetics, Vol: 5. Citations: 7.

- Journal article: Denham TLDO, Cleary K, Rodriguez y Baena F, et al., 2020, Guest editorial medical robotics: surgery and beyond, IEEE Transactions on Medical Robotics and Bionics, Vol: 2, Pages: 509-510, ISSN: 2576-3202.

  The IEEE Transactions on Medical Robotics and Bionics (T-MRB) is an initiative shared by two IEEE societies: the Robotics and Automation Society (RAS) and the Engineering in Medicine and Biology Society (EMBS).

- Journal article: Laws SG, Souipas S, Davies BL, et al., 2020, Toward Automated Tissue Classification for Markerless Orthopaedic Robotic Assistance, IEEE Transactions on Medical Robotics and Bionics, Vol: 2, Pages: 537-540. Citations: 4.

- Journal article: Terzano M, Dini D, Rodriguez y Baena F, et al., 2020, An adaptive finite element model for steerable needles, Biomechanics and Modeling in Mechanobiology, Vol: 19, Pages: 1809-1825, ISSN: 1617-7940.

  Penetration of a flexible and steerable needle into a soft target material is a complex problem to model, involving several mechanical challenges. In the present paper, an adaptive finite element algorithm is developed to simulate the penetration of a steerable needle in brain-like gelatine material, where the penetration path is not predetermined. The geometry of the needle tip induces asymmetric tractions along the tool–substrate frictional interfaces, generating a bending action on the needle in addition to combined normal and shear loading in the region where fracture takes place during penetration. The fracture process is described by a cohesive zone model, and the direction of crack propagation is determined by the distribution of strain energy density in the tissue surrounding the tip. Simulation results of deep needle penetration for a programmable bevel-tip needle design, where steering can be controlled by changing the offset between interlocked needle segments, are mainly discussed in terms of penetration force versus displacement, along with a detailed description of the needle tip trajectories. It is shown that such results are strongly dependent on the relative stiffness of needle and tissue and on the tip offset. The simulated relationship between programmable bevel offset and needle curvature is found to be approximately linear, confirming empirical results derived experimentally in a previous work. The proposed model enables a detailed analysis of the tool–tissue interactions during needle penetration, providing a reliable means to optimise the design of surgical catheters and aid pre-operative planning.

- Journal article: Favaro A, Secoli R, Rodriguez y Baena F, et al., 2020, Model-Based Robust Pose Estimation for a Multi-Segment, Programmable Bevel-Tip Steerable Needle, IEEE Robotics and Automation Letters, Vol: 5, Pages: 6780-6787, ISSN: 2377-3766. Citations: 12.

- Conference paper: Tatti F, Iqbal H, Jaramaz B, et al., 2020, A novel computer-assisted workflow for treatment of osteochondral lesions in the knee, CAOS 2020: The 20th Annual Meeting of the International Society for Computer Assisted Orthopaedic Surgery, Publisher: EasyChair, Pages: 250-253.

  Computer-Assisted Orthopaedic Surgery (CAOS) is now becoming more prevalent, especially in knee arthroplasty. CAOS systems have the potential to improve the accuracy and repeatability of surgical procedures by means of digital preoperative planning and intraoperative tracking of the patient and surgical instruments. One area where the accuracy and repeatability of computer-assisted interventions could prove especially beneficial is the treatment of osteochondral defects (OCD). OCDs represent a common problem in the patient population, and are often a cause of pain and discomfort. The use of synthetic implants is a valid option for patients who cannot be treated with regenerative methods, but the outcome can be negatively impacted by incorrect positioning of the implant and lack of congruency with the surrounding anatomy. In this paper, we present a novel computer-assisted surgical workflow for the treatment of osteochondral defects. The software we developed automatically selects the implant that most closely matches the patient’s anatomy and computes the best pose. By combining this software with the existing capabilities of the Navio™ surgical system (Smith & Nephew Inc.), we were able to create a complete workflow that incorporates both surgical planning and assisted bone preparation. Our preliminary testing on plastic bone models was successful and demonstrated that the workflow can be used to select and position an appropriate implant for a given defect.

- Journal article: Tan Z, Ewen J, Galvan S, et al., 2020, What does a brain feel like?, Journal of Chemical Education, Vol: 97, Pages: 4078-4083, ISSN: 0021-9584.

  We present a two-part hands-on science outreach demonstration utilizing composite hydrogels to produce realistic models of the human brain. The blends of poly(vinyl alcohol) and Phytagel closely match the mechanical properties of real brain tissue under conditions representative of surgical operations. The composite hydrogel is simple to prepare, biocompatible, and nontoxic, and the required materials are widely available and inexpensive. The first part of the demonstration gives participants the opportunity to feel how soft and deformable our brains are. The second part allows students to perform a mock brain surgery on a simulated tumor. The demonstration tools are suitable for public engagement activities as well as for various student training groups. The activities encompass concepts in polymer chemistry, materials science, and biology.

- Journal article: Khan F, Donder A, Galvan S, et al., 2020, Pose Measurement of Flexible Medical Instruments Using Fiber Bragg Gratings in Multi-Core Fiber, IEEE Sensors Journal, Vol: 20, Pages: 10955-10962, ISSN: 1530-437X. Citations: 26.

This data is extracted from the Web of Science and reproduced under a licence from Thomson Reuters. You may not copy or re-distribute this data in whole or in part without the written consent of the Science business of Thomson Reuters.

Contact Us

General enquiries

hamlyn@imperial.ac.uk

Facility enquiries

hamlyn.facility@imperial.ac.uk

The Hamlyn Centre

Bessemer Building

South Kensington Campus

Imperial College

London, SW7 2AZ
